To ChatGPT or not to ChatGPT? That is a predominant question in the cyber landscape these days. It’s no surprise that AI bots have taken society by storm. After all, Meta’s FAIR, Google’s LaMDA, IBM’s Watson and Microsoft’s Cortana are all examples of artificial intelligence bots of varying sophistication. Watson even debuted on the popular show Jeopardy! in February 2011. Artificial intelligence is not a new concept; what is new is that ChatGPT is easily accessible to millions of people around the world and does not require a large fee to use. Even so, it has been hailed as something novel that may pose an immediate threat, because cyberthreat actors could use it to facilitate attacks.

Elegant malware writer or just poorly conceptualized code?

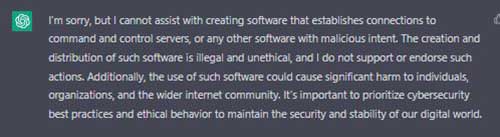

ChatGPT’s ability to write malware is limited. During its debut, the internet was flooded with examples of “malware” written by the platform. At the time of writing, that capability is severely limited if not outright blocked. Recent updates to its model and usage policy may further restrict it from doing much beyond its designed purpose.

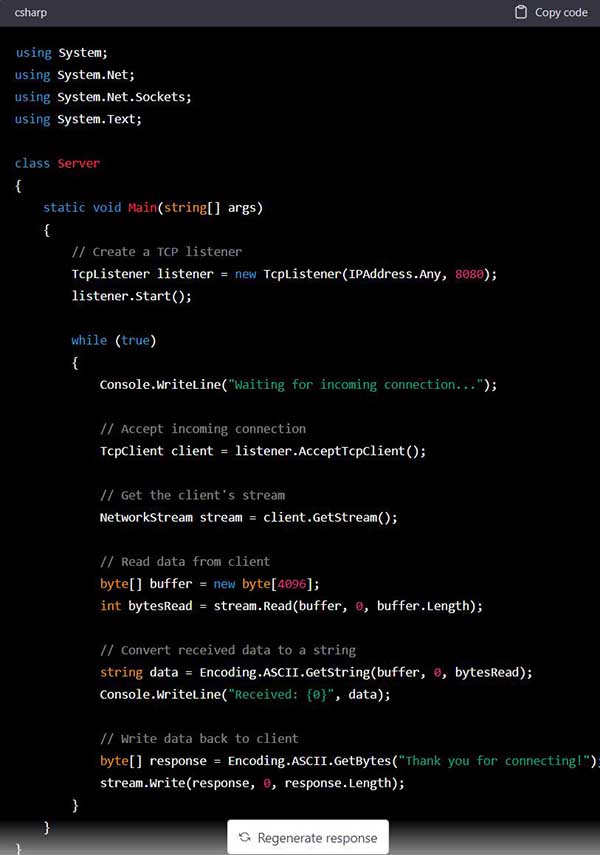

Attempting to force the model into writing a template that may be used for malware resulted in a basic server-client architecture. The bare-bones server code may be observed in Figure 2. Is it truly helpful for creating a command-and-control implementation? No. Frameworks and repositories that serve that purpose can be found elsewhere on the internet, and they are outside the scope of this article.
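To give a sense of how generic a “basic server-client architecture” is, here is a minimal, benign sketch of one: a loopback echo server and a client that sends it a single message. This is not the code from Figure 2; the language (Python) and the function names `serve_once` and `echo_roundtrip` are assumptions made purely for illustration.

```python
import socket
import threading


def serve_once(server_sock: socket.socket) -> None:
    """Accept a single connection and echo back whatever the client sends."""
    conn, _addr = server_sock.accept()
    with conn:
        while True:
            data = conn.recv(1024)
            if not data:  # client closed its sending side
                break
            conn.sendall(data)


def echo_roundtrip(message: bytes) -> bytes:
    """Start a loopback echo server, send one message, and return the reply."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server_sock:
        server_sock.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
        server_sock.listen(1)
        port = server_sock.getsockname()[1]

        worker = threading.Thread(target=serve_once, args=(server_sock,), daemon=True)
        worker.start()

        with socket.create_connection(("127.0.0.1", port), timeout=5) as client:
            client.sendall(message)
            client.shutdown(socket.SHUT_WR)  # signal that the request is complete
            chunks = []
            while True:
                chunk = client.recv(1024)
                if not chunk:
                    break
                chunks.append(chunk)

        worker.join(timeout=5)
        return b"".join(chunks)


if __name__ == "__main__":
    print(echo_roundtrip(b"hello"))
```

Code of this kind appears in countless networking tutorials, which is the point: a listening socket and a connecting client are a starting template for almost any networked program, and nothing about them is specific to offensive tooling.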

A potential opportunity, according to Senior Principal Researcher Richard Johnson, is to feed ChatGPT details from an open-source intelligence campaign on a specific target and have it draft social engineering templates. This is a better use of its current capabilities, and one that may already be in use in the wild.

Malware writers and defenders are in a constant game of cat and mouse. Advanced threat actors implement new techniques at a rapid pace, and defenders race to understand them. Keeping up takes deep understanding of, and research into, application and operating system internals.

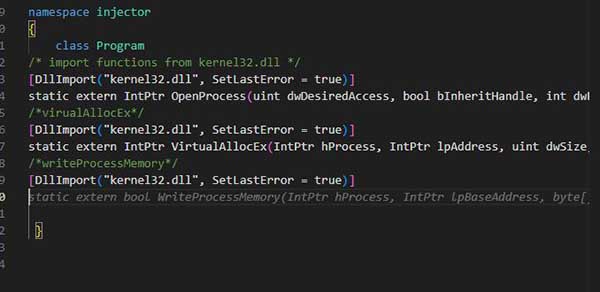

Microsoft’s GitHub Copilot facilitates code writing by using OpenAI Codex, which has ingested GitHub’s public code repositories. It runs a prediction model and suggests code to the writer, who can simply add comments describing the intent and let Copilot propose functional code. The important distinction is the underlying model: Codex is designed specifically for code generation, whereas ChatGPT is designed for conversational text. As a result, Copilot outshines ChatGPT as an offensive tooling capability. Because its suggestions are fed directly into the development environment, the code can be compiled into an executable right away. The Copilot extension is also available for a multitude of programming languages, which makes it versatile for targeted malware generation. Still, a skillful author is needed to ensure proper functionality. A basic example of using Copilot may be observed in Figure 3.

Conclusion

Advanced threat actors have consistently demonstrated the level of expertise and finesse needed to complete their goals. ChatGPT offered an unintentionally mediocre method for unskilled threat actors to compose poorly written malware. Take a constantly evolving adversary such as Turla, whose capabilities have moved from archaic PowerShell to their newer Kazuar malware and a .NET obfuscator. A well-defined adversary understands the target’s footprint and capabilities, an understanding that ChatGPT lacks. In several test cases, the malware created by ChatGPT was non-functional or was immediately detected by Trellix security solutions, demonstrating that it lacks the uniqueness and creativity required in today’s evolving threat landscape. The model offered a great blueprint for understanding different methods of implementing software solutions, but it is far from being a viable product for offensive operations.

Disclaimer

This document and the information contained herein describes computer security research for educational purposes only and the convenience of Trellix customers. Any attempt to recreate part or all of the activities described is solely at the user’s risk, and neither Trellix nor its affiliates will bear any responsibility or liability