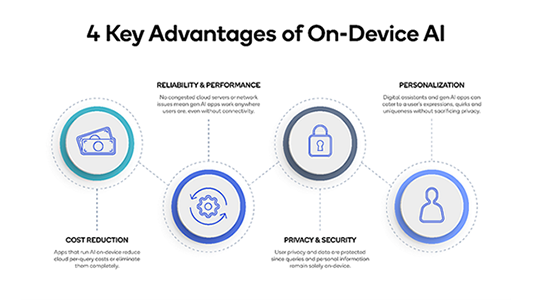

Qualcomm Technologies, Inc. and Meta are working to optimize the execution of Meta’s Llama 2 large language models directly on-device, without relying solely on cloud services. The ability to run generative AI models like Llama 2 on devices such as smartphones, PCs, VR/AR headsets, and vehicles allows developers to save on cloud costs and to provide users with private, more reliable, and personalized experiences.

As a result, Qualcomm Technologies plans to make available on-device Llama 2-based AI implementations to enable the creation of new and exciting AI applications. This will allow customers, partners, and developers to build use cases, such as intelligent virtual assistants, productivity applications, content creation tools, entertainment, and more. These new on-device AI experiences, powered by Snapdragon®, can work in areas with no connectivity or even in airplane mode.

“We applaud Meta’s approach to open and responsible AI and are committed to driving innovation and reducing barriers-to-entry for developers of any size by bringing generative AI on-device,” said Durga Malladi, senior vice president and general manager of technology, planning and edge solutions businesses, Qualcomm Technologies, Inc. “To effectively scale generative AI into the mainstream, AI will need to run on both the cloud and devices at the edge, such as smartphones, laptops, vehicles, and IoT devices.”

Meta and Qualcomm Technologies have a longstanding history of working together to drive technology innovation and deliver the next generation of premium device experiences. The Companies’ current collaboration to support the Llama ecosystem spans research and product engineering efforts. Qualcomm Technologies’ leadership in on-device AI uniquely positions it to support the Llama ecosystem. The Company has an unmatched footprint at the edge, with billions of smartphones, vehicles, XR headsets and glasses, PCs, IoT devices, and more powered by its industry-leading AI hardware and software solutions, enabling the opportunity for generative AI to scale.

Qualcomm Technologies is scheduled to make Llama 2-based AI implementations available on devices powered by Snapdragon starting in 2024. Developers can start optimizing applications for on-device AI today using the Qualcomm® AI Stack, a dedicated set of tools for processing AI more efficiently on Snapdragon, making on-device AI possible even in small, thin, and light devices. For more updates, subscribe to our monthly developers newsletter.

Cautionary Note Regarding Forward-Looking Statements

In addition to historical information, this news release contains forward-looking statements that are inherently subject to risks and uncertainties, including but not limited to statements regarding our collaboration with Meta and the benefits and impact thereof, our plans to make available Llama 2-based AI implementations on devices powered by Snapdragon and the timing thereof, and the benefits and performance of on-device AI. Forward-looking statements are generally identified by words such as “estimates,” “guidance,” “expects,” “anticipates,” “intends,” “plans,” “believes,” “seeks” and similar expressions. Such forward-looking statements speak only as of the date of this news release, and are based on our current assumptions, expectations and beliefs, and information currently available to us. These forward-looking statements are not guarantees of future performance, and actual results may differ materially from those referred to in the forward-looking statements due to a number of important factors, including but not limited to the risks described in our most recent Annual Report on Form 10-K and subsequent Quarterly Reports on Form 10-Q filed with the U.S. Securities and Exchange Commission (SEC). Our reports filed with the SEC are available on our website at www.qualcomm.com. We undertake no obligation to update, or continue to provide information with respect to, any forward-looking statement or risk factor, whether as a result of new information, future events or otherwise.