Introduction

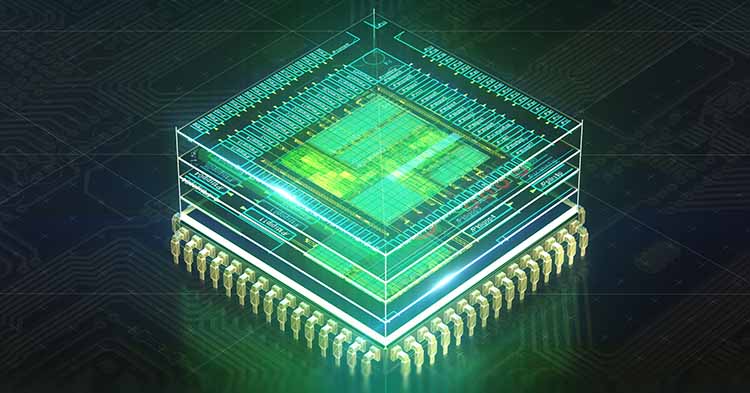

Artificial Intelligence (AI) is transforming industries, powering everything from self-driving cars and facial recognition to medical diagnostics and real-time language translation. Behind the scenes, this revolution is being accelerated by a new breed of hardware: AI-specific chips. These purpose-built processors are designed to handle the immense data and complex calculations that AI workloads demand.

In this post, we’ll explore why AI-specific chips are gaining momentum, how they work, the different types available, their real-world applications, and what the future holds for this rapidly evolving space.

Why General-Purpose Chips Aren’t Enough

Traditional CPUs (Central Processing Units) have been the backbone of computing for decades. They’re versatile and powerful, but they are built to run a small number of complex instruction streams quickly, not thousands of simple operations at once. AI tasks, particularly those involving deep learning, are dominated by large matrix multiplications whose individual multiply-add operations are independent of one another, so they reward hardware with very high parallel throughput.

This is where general-purpose chips fall short.
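To make the scale concrete, here is a minimal sketch in plain Python that multiplies two small matrices and counts the multiply-accumulate (MAC) operations involved. The function names and the layer sizes in the last line are illustrative assumptions, not figures from any particular model or chip.

```python
def matmul_with_op_count(a, b):
    """Multiply two matrices (lists of rows); return (product, MAC count)."""
    rows, inner, cols = len(a), len(b), len(b[0])
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions must match")
    macs = 0
    out = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            # Each output element depends only on row i of a and column j of b,
            # so all rows * cols elements could in principle be computed in parallel.
            acc = 0.0
            for k in range(inner):
                acc += a[i][k] * b[k][j]
                macs += 1
            out[i][j] = acc
    return out, macs


def dense_layer_macs(batch, in_features, out_features):
    """MACs for one fully connected layer: one per (sample, input, output) triple."""
    return batch * in_features * out_features


product, macs = matmul_with_op_count([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(product)  # [[19.0, 22.0], [43.0, 50.0]]
print(macs)     # 8

# Hypothetical layer: batch of 32, 4096 inputs, 4096 outputs.
print(dense_layer_macs(32, 4096, 4096))  # 536870912
```

A single layer of that assumed size already needs over half a billion multiply-adds, and a deep network stacks many such layers. A CPU works through these largely in sequence, while parallel hardware can spread the independent output elements across many units.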

GPUs (Graphics Processing Units) improved the situation by offering parallel computing capabilities, originally developed for rendering images. But even GPUs, while effective, are not optimized for all types of AI tasks. Enter AI-specific chips, designed from the ground up to accelerate machine learning and inference tasks.

What Are AI-Specific Chips?

AI-specific chips are specialized processors tailored to handle tasks involved in training and inference of AI models. They include features like high-speed memory access, parallel architecture, and support for tensor computations.

Key characteristics include:

- High Throughput: Capable of trillions of operations per second (commonly quoted in TOPS).

- Low Latency: Crucial for real-time AI systems such as autonomous vehicles, where rapid response times are vital.

- Power Efficiency: Optimized to deliver more performance per watt than traditional chips.

- Custom Architectures: Designed specifically to handle neural networks and other AI model workloads efficiently.
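The link between throughput and latency in the list above can be sketched with simple arithmetic. The example below is a rough estimate, assuming a model's cost can be summarized as a fixed number of operations per frame and that the chip sustains a given fraction of its peak rate. All numbers and function names are hypothetical and chosen only for illustration.

```python
def inference_latency_ms(ops_per_inference, ops_per_second, utilization=1.0):
    """Estimate latency in milliseconds from an operation count and a sustained rate."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return ops_per_inference / (ops_per_second * utilization) * 1000.0


def meets_frame_rate(latency_ms, frames_per_second):
    """True if one inference fits inside the time budget of a single frame."""
    return latency_ms <= 1000.0 / frames_per_second


# Hypothetical workload and chips (assumptions, not measured figures).
model_ops = 10e9          # 10 billion operations per camera frame
general_purpose = 100e9   # 100 billion operations per second
accelerator = 10e12       # 10 trillion operations per second (10 TOPS)

cpu_ms = inference_latency_ms(model_ops, general_purpose)  # about 100 ms
accel_ms = inference_latency_ms(model_ops, accelerator)    # about 1 ms

print(meets_frame_rate(cpu_ms, 30))    # False: the 30 fps budget is about 33 ms
print(meets_frame_rate(accel_ms, 30))  # True
```

Real chips rarely sustain their peak rate, which is why the sketch includes a `utilization` parameter. Memory bandwidth and data movement often matter as much as raw arithmetic.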

Types of AI-Specific Chips

- TPUs (Tensor Processing Units)

  - Developed by Google.

  - Specially designed for neural network computations.

  - Widely used in data centers for AI training and inference.

- NPUs (Neural Processing Units)

  - Found in smartphones and edge devices.

  - Optimize on-device AI tasks like face detection and voice recognition.

  - Examples: Apple’s Neural Engine and the NPUs in Huawei’s Kirin chipsets.

- FPGAs (Field-Programmable Gate Arrays)

  - Reconfigurable chips that can be customized for specific AI workloads.

  - Offer a balance between performance and flexibility.

  - Used in applications where adaptability is key.

- ASICs (Application-Specific Integrated Circuits)

  - Custom-designed chips built for a specific AI task.

  - Offer the highest performance and efficiency for that task, at the cost of flexibility.

  - Common in high-volume applications like autonomous vehicles.

- Edge AI Chips

  - Optimized for on-device data processing in equipment such as cameras, drones, and robots.

  - Reduce dependency on cloud infrastructure.

  - Enable faster decision-making and enhance privacy.

Real-World Applications

- Smartphones

  - AI chips power real-time image enhancement, voice recognition, and smart assistant functionalities.

  - Example: Recent Google Pixel phones use the Google Tensor chip, which includes a built-in TPU, for on-device speech recognition and photo processing.

- Autonomous Vehicles

  - Self-driving systems rely on AI chips to process sensor data in real time.

  - Tesla has reported that its custom Full Self-Driving (FSD) computer processes about 2,300 camera frames per second for navigation.

- Healthcare

  - AI chips accelerate diagnosis by analyzing medical images and patient data.

  - They are also used in portable diagnostic devices for real-time analysis.

- Smart Cameras and Surveillance

  - Edge AI chips recognize faces, detect motion, and analyze behavior patterns on the device in real time.

- Cloud AI Services

  - Data centers use TPUs and GPUs to train large-scale AI models.

  - These chips power services like Google Translate, Amazon Alexa, and ChatGPT.

Benefits of AI-Specific Chips

- Speed: Faster training and inference times.

- Efficiency: Delivers the same performance with reduced power consumption.

- Scalability: Suited for both small devices and massive data centers.

- Accuracy: More compute within the same time and power budget makes it practical to run larger, more accurate models.

- Security: Processing data directly on the device minimizes transmission, enhancing privacy and protection.
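The efficiency benefit above is usually expressed as performance per watt, which translates directly into energy per inference. The following sketch works through that arithmetic for two hypothetical chips with the same throughput but different power draw. The figures and function names are assumptions for illustration, not vendor specifications.

```python
def perf_per_watt(ops_per_second, watts):
    """Operations per second delivered for each watt consumed."""
    return ops_per_second / watts


def energy_per_inference_joules(ops_per_inference, ops_per_second, watts):
    """Energy = power * time, where time = operations / throughput."""
    latency_seconds = ops_per_inference / ops_per_second
    return watts * latency_seconds


# Hypothetical chips: identical throughput, different power draw.
throughput = 1e12      # 1 trillion operations per second for both chips
general_watts = 100.0  # assumed power draw of a general-purpose chip
accel_watts = 10.0     # assumed power draw of an AI-specific chip
model_ops = 1e10       # assumed cost of one inference

general_j = energy_per_inference_joules(model_ops, throughput, general_watts)
accel_j = energy_per_inference_joules(model_ops, throughput, accel_watts)

print(perf_per_watt(throughput, general_watts))  # 1e10 operations per second per watt
print(perf_per_watt(throughput, accel_watts))    # 1e11 operations per second per watt
print(round(general_j / accel_j))                # 10
```

Under these assumptions the AI-specific chip uses a tenth of the energy per inference. On a battery-powered device that means roughly ten times as many inferences per charge.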

Challenges and Considerations

- High Development Costs: Designing and fabricating AI chips requires significant investment.

- Hardware Fragmentation: Many chip designs lead to compatibility and integration issues.

- Rapid Obsolescence: Fast-paced innovation means shorter product life cycles.

- Thermal Management: High-performance chips need advanced cooling systems.

- Ecosystem Support: Software and toolchains must evolve alongside hardware.

The Future of AI-Specific Chips

The demand for smarter, faster, and more energy-efficient AI hardware is only increasing. Emerging trends influencing the future include:

- 3D Chip Stacking: For higher performance in smaller packages.

- Quantum AI Processors: Still in early stages, they may eventually offer large speedups for certain classes of problems.

- Federated Learning Support: Chips that can handle decentralized learning efficiently.

- Open Source Hardware: Open instruction set architectures like RISC-V enable customizable AI chip designs.

- AI-on-Edge Expansion: Growth of IoT and wearables will drive demand for compact, powerful AI chips.
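For the federated learning item above, the aggregation step that combines models trained on separate devices can be sketched as a weighted average. This is a minimal illustration in the style of federated averaging, assuming each device reports a flat list of weights plus the number of local samples it trained on. The function name and data layout are hypothetical, and a real system would add secure communication, client selection, and much larger models.

```python
def federated_average(client_updates):
    """Combine per-device weights into one global model.

    client_updates: list of (weights, num_samples) pairs, where weights is a
    list of floats. Devices with more local data get proportionally more say.
    """
    if not client_updates:
        raise ValueError("need at least one client update")
    total_samples = sum(n for _, n in client_updates)
    if total_samples <= 0:
        raise ValueError("total sample count must be positive")
    size = len(client_updates[0][0])
    averaged = [0.0] * size
    for weights, num_samples in client_updates:
        if len(weights) != size:
            raise ValueError("all clients must report the same number of weights")
        for i, w in enumerate(weights):
            averaged[i] += w * num_samples / total_samples
    return averaged


# Two hypothetical devices: one trained on 100 samples, one on 300.
updates = [([1.0, 2.0], 100), ([3.0, 4.0], 300)]
global_weights = federated_average(updates)
print(global_weights)  # [2.5, 3.5]
```

Only the weights leave each device in this scheme, not the raw data. The local training that produces those weights is the part that runs on the on-device chip.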