In recent years, there has been a noticeable trend in optical transceiver technology toward moving the transceiver closer to the ASIC. Traditionally, pluggable optics (optical modules that are inserted into and removed from the front panel of a switch) have been located near the edge of the printed circuit board (PCB). These pluggable optics are widely used in data center networks to interconnect switches and servers. While they offer flexibility, scalability, and easy upgrades, they come with significant challenges, particularly high power consumption and limited bandwidth density.

To overcome these limitations, the industry is experiencing a paradigm shift. Optical transceivers are being brought closer to the ASIC, with the goal of shortening the copper channel required for electrical signal transmission. However, despite advancements in reducing the length of the copper channel, the challenges posed by deviating from the industry-standard pluggable architecture are not yet fully resolved. As a result, the industry may move directly toward more advanced solutions, like Co-Packaged Optics (CPO). IDTechEx’s report, “Co-Packaged Optics (CPO) 2025-2035: Technologies, Market, and Forecasts”, explores the latest advancements in CPO technology. It analyzes key technical innovations and packaging trends, evaluates major industry players, and provides detailed market forecasts, highlighting how CPO adoption will transform future data center architecture.

The rise of co-packaged optics (CPO)

Co-Packaged Optics (CPO) represents a significant leap in data transmission technology. CPO integrates the optical engine and the switching silicon onto the same substrate. This design eliminates the need for signals to traverse the PCB, further reducing the electrical channel path and significantly improving performance.

Reducing the electrical channel path is crucial because data transfer relies on copper-based SerDes (Serializer/Deserializer) circuitry, which connects the switching ASIC to the pluggable transceivers. As data demands grow, SerDes technology has evolved to enable faster transmission, but faster ASICs require more copper bandwidth, delivered either through more channels or through higher speeds per channel. As link density and bandwidth increase, a significant portion of system power, and thus cost, is consumed by driving signals from the ASIC to the optical interconnects at the edge of the rack. Adding channels is constrained by package size: warpage concerns limit how large an ASIC ball grid array (BGA) package can grow, which caps the number of SerDes lanes it can host. Increased bandwidth must therefore come from higher per-lane SerDes speeds. This in turn raises power consumption, because channel loss grows at higher frequencies and more power is needed to overcome it.
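The arithmetic behind this constraint can be sketched in a few lines of Python. The 512-lane budget and the aggregate bandwidth targets are illustrative assumptions rather than figures from this article, and the rates are nominal (FEC and encoding overhead are ignored). The sketch also assumes PAM4 signaling, which carries two bits per symbol, so the Nyquist frequency works out to a quarter of the per-lane bit rate.

```python
# Sketch: with a fixed SerDes lane count (capped by BGA package size),
# doubling aggregate bandwidth doubles the per-lane rate and therefore the
# signaling frequency the copper channel must carry.
# Assumptions (not from the article): a 512-lane budget, PAM4 signaling,
# nominal rates with FEC/encoding overhead ignored.

PAM4_BITS_PER_SYMBOL = 2


def per_lane_rate_gbps(total_gbps: float, lanes: int) -> float:
    """Per-lane data rate required to reach an aggregate bandwidth."""
    return total_gbps / lanes


def nyquist_ghz(lane_rate_gbps: float, bits_per_symbol: int = PAM4_BITS_PER_SYMBOL) -> float:
    """Nyquist frequency is half the symbol rate (in GBd)."""
    symbol_rate_gbaud = lane_rate_gbps / bits_per_symbol
    return symbol_rate_gbaud / 2


if __name__ == "__main__":
    LANES = 512  # hypothetical lane budget fixed by package size
    for total_gbps in (25_600, 51_200, 102_400):
        rate = per_lane_rate_gbps(total_gbps, LANES)
        print(f"{total_gbps / 1000:.1f} Tb/s over {LANES} lanes: "
              f"{rate:.0f} Gb/s per lane, Nyquist ~{nyquist_ghz(rate):.1f} GHz")
```

Under these assumptions, going from 51.2 Tb/s to 102.4 Tb/s moves each lane from 100 Gb/s (Nyquist about 25 GHz) to 200 Gb/s (about 50 GHz), a frequency range where copper channel loss is considerably higher.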

One of the key solutions to these challenges is to reduce the distance between the ASIC and the optical transceiver. Bringing the optical transceivers closer to the ASIC offers several advantages:

- Reduced Signal Losses: Shortening the electrical path between the ASIC and the optical interconnect minimizes signal degradation.

- Lower Power Consumption: Reducing the distance allows for the use of lower-power SerDes options, leading to overall lower system power consumption.

- Enhanced Efficiency and Performance: Cutting the power required for data transmission significantly improves system efficiency and performance.

- Scalability: CPO technology supports future scalability for high-bandwidth systems, making it ideal for data centers that need to meet growing data demands.
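The first two benefits can be illustrated with a toy channel model. The sketch below assumes that insertion loss grows linearly with trace length and combines a skin-effect term proportional to √f with a dielectric term proportional to f, a common first-order approximation for copper traces. The coefficients and path lengths are hypothetical placeholders, not measured values. It also encodes the identity that electrical I/O power equals data rate times energy per bit (1 Tb/s at 1 pJ/bit is 1 W).

```python
import math

# Toy first-order copper channel model (all coefficients hypothetical):
#   loss_dB = length_cm * (k_skin * sqrt(f_GHz) + k_diel * f_GHz)
# Skin-effect loss scales roughly with sqrt(f); dielectric loss roughly with f.
K_SKIN_DB_PER_CM = 0.05  # hypothetical
K_DIEL_DB_PER_CM = 0.02  # hypothetical


def insertion_loss_db(length_cm: float, freq_ghz: float) -> float:
    """Approximate insertion loss of a copper trace in dB."""
    per_cm = K_SKIN_DB_PER_CM * math.sqrt(freq_ghz) + K_DIEL_DB_PER_CM * freq_ghz
    return length_cm * per_cm


def io_power_w(bandwidth_tbps: float, energy_pj_per_bit: float) -> float:
    """Electrical I/O power: (1e12 bit/s) * (1e-12 J/bit) = 1 W per Tb/s per pJ/bit."""
    return bandwidth_tbps * energy_pj_per_bit


if __name__ == "__main__":
    # Hypothetical electrical path lengths from the ASIC to the optics.
    paths = {"ASIC to front-panel pluggable": 25.0, "co-packaged optical engine": 2.0}
    for name, length_cm in paths.items():
        for f_ghz in (25.0, 50.0):
            loss = insertion_loss_db(length_cm, f_ghz)
            print(f"{name:>30}: {f_ghz:4.0f} GHz -> {loss:5.1f} dB")
```

In this model, loss scales directly with length, so shortening the electrical path shrinks the loss the SerDes must equalize at every frequency. A SerDes designed for a low-loss channel can then run at a lower energy per bit, and `io_power_w` shows how that saving multiplies across the full switch bandwidth (for example, `io_power_w(51.2, 5.0)` gives 256 W, with both inputs as placeholders).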

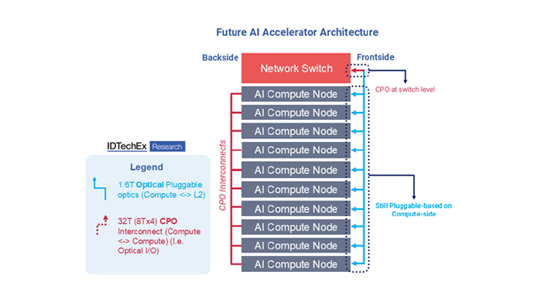

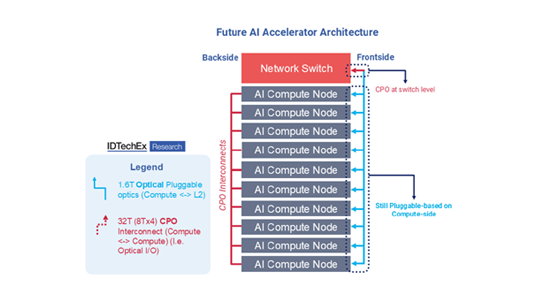

How CPO will shape interconnect architecture for AI

CPO is set to transform interconnect architecture for AI. Here, IDTechEx uses Nvidia’s cutting-edge DGX NVL72 server as an example to explain how it expects next-generation AI architecture to evolve. Nvidia, the market leader in AI accelerators, designed the DGX NVL72 to redefine AI performance standards, supporting models of up to 27 trillion parameters, far surpassing the roughly 1.5 trillion parameters reported for GPT-4.

The DGX NVL72 contains 18 compute nodes, each equipped with four Blackwell GPUs and two Grace CPUs, along with nine NVLink switches. Modern AI accelerator architectures such as this one employ multiple communication networks to manage data flow:

- Backside Compute Network: In the DGX NVL72 architecture, each compute node connects to an L1 compute switch via Nvidia’s NVLink Spine, a high-speed copper interconnect offering 1.8T of bidirectional bandwidth through 18 lanes of 100G, using 36 copper wires per connection. The L1 switches are interconnected in the same way, bringing the total to 5184 copper wires across the system. To maintain signal integrity over these distances, retimers are used at the switches, though they add latency and limit bandwidth, especially at higher speeds such as 100G per lane. Additionally, copper links like these, while cost-effective, suffer from signal degradation such as channel loss and clock jitter over longer distances, and these effects become more pronounced as bandwidth demands increase.

- Optical interconnects present a compelling alternative to copper, offering much higher bandwidth density and greater efficiency over long distances—critical for AI workloads involving massive data transfers between GPUs. Looking ahead, it is expected that copper interconnects will be replaced by Co-Packaged Optics (CPO), enabling direct connections between compute nodes and eliminating the need for L1 compute switches in the backside network.

- Frontside Network: Each AI compute node currently connects to an L2 switch using pluggable optical transceivers at speeds ranging from 400G to 800G. This optical setup facilitates long-distance data transmission between racks, offering lower signal loss and greater bandwidth density than copper. In the future, CPO is expected to be adopted at the network switch level, though pluggable optics may remain at the compute node level due to their flexibility and compatibility with various system configurations.
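The NVL72 figures quoted above can be cross-checked with simple arithmetic. The sketch uses only numbers stated in this article; the class and field names are hypothetical, and bandwidth is kept in the article's "G" units without asserting whether bits or bytes are meant.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RackConfig:
    """Illustrative description of a rack; field names are hypothetical."""
    compute_nodes: int
    gpus_per_node: int
    cpus_per_node: int
    nvlink_switches: int
    lanes_per_connection: int
    lane_rate_g: int  # per-lane rate, in the article's "G" units

    @property
    def total_gpus(self) -> int:
        return self.compute_nodes * self.gpus_per_node

    @property
    def total_cpus(self) -> int:
        return self.compute_nodes * self.cpus_per_node

    @property
    def connection_bandwidth_g(self) -> int:
        # 18 lanes x 100G = 1800G, i.e. the 1.8T quoted for the NVLink Spine
        return self.lanes_per_connection * self.lane_rate_g


# Figures as stated in the article for the DGX NVL72.
NVL72 = RackConfig(compute_nodes=18, gpus_per_node=4, cpus_per_node=2,
                   nvlink_switches=9, lanes_per_connection=18, lane_rate_g=100)

if __name__ == "__main__":
    print(f"GPUs: {NVL72.total_gpus}, CPUs: {NVL72.total_cpus}, "
          f"NVLink switches: {NVL72.nvlink_switches}")
    print(f"Per-connection bandwidth: {NVL72.connection_bandwidth_g / 1000:.1f}T")
```

The 72 GPUs that give the system its name follow directly from 18 nodes of four GPUs each.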

Ultimately, CPO will reshape AI interconnect architecture by improving data movement, reducing bottlenecks, and allowing for higher efficiency and scalability in next-generation AI systems. The future will likely see direct optical connections, eliminating compute switches and increasing bandwidth for AI workloads, though the complexity of connections will also rise.
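The trade-off in the last sentence can be made concrete by counting links. The sketch below assumes, purely for illustration, that "direct optical connections" means a full mesh in which every endpoint links to every other endpoint, and compares it with a switched design in which each endpoint links once to each of a fixed number of switches. The article does not specify either topology at this level of detail, and the function names are hypothetical.

```python
def full_mesh_links(endpoints: int) -> int:
    """Point-to-point links when every endpoint connects directly to every other."""
    return endpoints * (endpoints - 1) // 2


def full_mesh_ports_per_endpoint(endpoints: int) -> int:
    """In a full mesh, each endpoint needs one port toward every other endpoint."""
    return endpoints - 1


def switched_links(endpoints: int, switches: int) -> int:
    """Links when each endpoint connects once to each switch (switch-to-switch links ignored)."""
    return endpoints * switches


if __name__ == "__main__":
    SWITCHES = 9  # matches the nine NVLink switches quoted for the NVL72
    for n in (18, 36, 72):
        print(f"{n:3d} endpoints: full mesh {full_mesh_links(n):5d} links "
              f"({full_mesh_ports_per_endpoint(n)} ports each), "
              f"switched {switched_links(n, SWITCHES):4d} links ({SWITCHES} ports each)")
```

Under these assumptions, 18 endpoints need 153 mesh links versus 162 switched links, but at 72 endpoints the mesh needs 2556 links versus 648. Mesh link count grows quadratically while the switched count grows linearly, so removing the switch hop trades switch hardware for rapidly growing wiring and port complexity.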

IDTechEx’s report on the topic, “Co-Packaged Optics (CPO) 2025-2035: Technologies, Market, and Forecasts”, offers an extensive exploration into the latest advancements within co-packaged optics technology. The report delves deep into key technical innovations and packaging trends, comprehensively analyzing the entire value chain. It thoroughly evaluates the activities of major industry players and delivers detailed market forecasts, projecting how the adoption of CPO will reshape the landscape of future data center architecture.

Central to the report is the recognition of advanced semiconductor packaging as the cornerstone of co-packaged optics technology. IDTechEx places significant emphasis on understanding the potential roles that various semiconductor packaging technologies may play within the realm of CPO.

To find out more about this report, including downloadable sample pages, please visit www.IDTechEx.com/CPO.

For the full portfolio of semiconductors, computing and AI market research available from IDTechEx, please visit www.IDTechEx.com/Research/AI.